Artificial intelligence (AI) is transforming industries such as healthcare, finance, autonomous vehicles, and entertainment. From powering large language models like ChatGPT to enabling advanced computer vision, AI workloads require immense computational resources. At the center of this revolution is the GPU (graphics processing unit). Understanding what a GPU is and why it supports AI is essential for anyone aiming to use AI effectively. The GPU is a key AI accelerator because it delivers the parallel computing performance these workloads depend on.

While CPUs (central processing units) are essential for general-purpose computing, GPUs excel in high-throughput, repetitive tasks, making them indispensable for deep learning and large-scale AI training. This guide covers GPU architecture, benchmark comparisons, cost-benefit analysis of cloud vs on-premise GPUs, common mistakes, buyer’s checklists, and future trends.

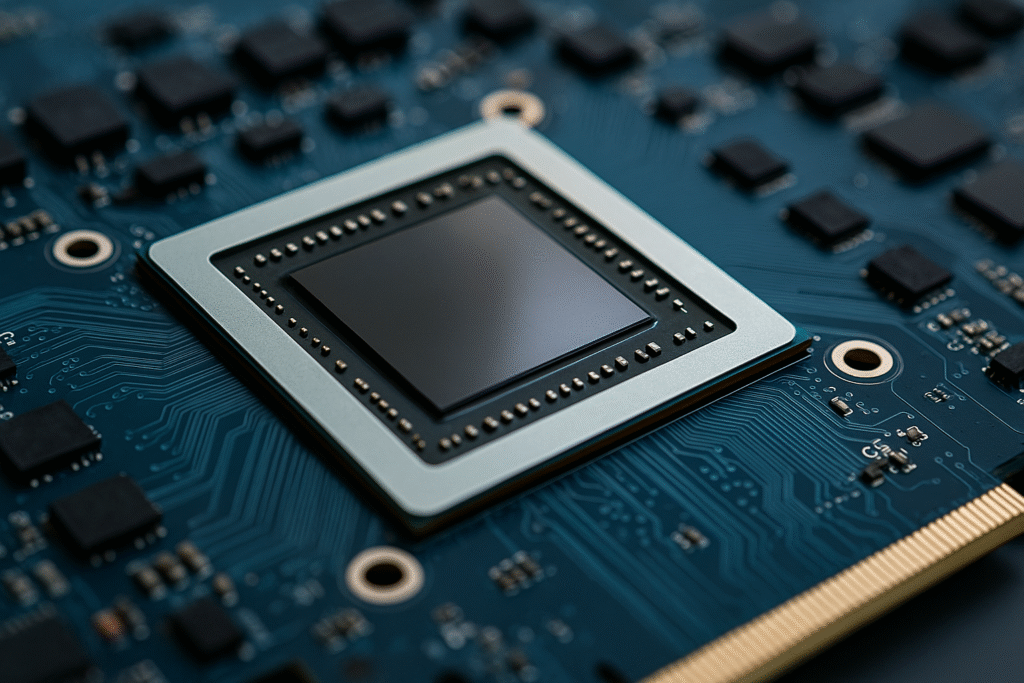

1. Understanding the Graphics Processing Unit (GPU) in AI

A GPU is a specialized processor originally designed for rendering graphics and now widely used for AI and machine learning. Modern data center GPUs run thousands of threads simultaneously, which gives them very high throughput on parallel workloads. What a GPU is, and why it supports AI, comes down to this design: it is built for high-speed parallel computation, so it can accelerate workloads such as neural network training well beyond what a traditional CPU can manage.

Key Features for AI:

- Massive Parallelism: Thousands of cores for faster AI model training.

- High Memory Bandwidth: Fast data transfer between VRAM and GPU cores.

- Specialized AI Cores: Tensor cores accelerate matrix multiplication for deep learning.

- Programmable Ecosystem: CUDA, ROCm, and OpenCL support custom workload optimization.

Definition Note: FP16 is 16-bit floating-point precision and INT8 is 8-bit integer precision, both used to speed up AI computation while saving memory.
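
To make the INT8 idea concrete, here is a minimal sketch of symmetric INT8 quantization in plain Python. The function names and the sample weights are hypothetical, chosen only for illustration; real frameworks use more elaborate schemes (per-channel scales, calibration data), so treat this as a sketch of the principle rather than how any particular library does it.

```python
def quantize_int8(values):
    """Symmetric INT8 quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One shared scale for the whole list (an assumption made for simplicity).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Map the stored integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.82, -0.31, 0.05, -1.27, 0.64]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", q)
print("scale:", scale)
print("largest round-trip error:", max_error)
```

Each weight is stored in 1 byte instead of the 4 bytes FP32 uses, at the cost of a rounding error of at most half the scale per value.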

2. GPU vs CPU: Which is Better for AI Performance?

| Feature | CPU | GPU |
| --- | --- | --- |
| Core Count | 4–64 high-performance cores | Thousands of parallel cores |
| Task Type | Sequential tasks | Parallel, repetitive tasks |
| AI Training Speed | Moderate | Up to 100× faster for large datasets |
| Efficiency | Lower for AI | Optimized for matrix operations |

Why GPUs Lead:

- Built for the parallel computation AI requires

- Optimized for matrix operations in neural networks

- Hardware acceleration for AI, such as tensor cores

- Scalable in multi-GPU setups
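
The reason matrix operations suit parallel hardware can be sketched in a few lines of Python. In a matrix multiplication, every output row depends on only one row of the left matrix, so the rows can be computed independently. The function names below are hypothetical, and the thread pool only illustrates that independence: Python threads will not deliver GPU-like speedups.

```python
from concurrent.futures import ThreadPoolExecutor


def row_times_matrix(row, matrix):
    """Compute one output row: each entry is a dot product of `row` with a column."""
    return [sum(a * b for a, b in zip(row, col)) for col in zip(*matrix)]


def matmul_sequential(a, b):
    """Compute the output rows one after another, as a single core would."""
    return [row_times_matrix(row, b) for row in a]


def matmul_parallel(a, b, workers=4):
    """Hand each output row to a worker; no row needs another row's result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: row_times_matrix(row, b), a))


a = [[1, 2], [3, 4], [5, 6]]
b = [[7, 8, 9], [10, 11, 12]]
result = matmul_parallel(a, b)
print(result)
```

A GPU applies the same idea at a much finer grain, assigning individual output elements or tiles to thousands of hardware threads.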

3. How GPUs Accelerate AI Workloads

- Batch Processing: Handles large datasets in parallel

- Tensor Operations: Deep learning-optimized hardware

- Mixed Precision Training: Uses FP16 alongside FP32 for efficiency; INT8 is used mainly for inference

- Energy Efficiency: Dynamic power management adjusts clock speeds to match the workload
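
One practical detail of mixed precision training is that very small gradients can underflow to zero in FP16. A common remedy is loss scaling: multiply values before the FP16 step and divide afterwards in higher precision. The sketch below demonstrates the effect using Python's `struct` module, which supports IEEE 754 half precision through the `"e"` format. The gradient value and the scale factor of 1024 are assumptions picked for illustration, and Python's own floats stand in for the higher-precision copy.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


tiny_gradient = 1e-8   # illustrative value, too small for FP16
loss_scale = 1024.0    # hypothetical scale factor

# Stored directly in FP16, the gradient is lost.
unscaled = to_fp16(tiny_gradient)

# Scaled first, it survives the FP16 step and can be unscaled afterwards.
scaled = to_fp16(tiny_gradient * loss_scale)
recovered = scaled / loss_scale

print("direct FP16 value:", unscaled)
print("recovered after scaling:", recovered)
```

The recovered value is not exact, because FP16 has limited precision, but it is close to the original instead of being zero.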

Case Study: GPT-4 training involved thousands of GPUs, cutting weeks off training time (source).

Cost-Benefit Insight: Cloud GPU rentals reduce upfront costs but may be more expensive long-term than on-premise GPU investments.
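
The trade-off can be estimated with simple break-even arithmetic. Every figure below (purchase price, hourly running cost, cloud rate, utilization) is a hypothetical placeholder, not a quoted price. Substitute your own numbers.

```python
def break_even_hours(purchase_cost, onprem_hourly_cost, cloud_hourly_rate):
    """Hours of GPU use at which buying becomes cheaper than renting."""
    hourly_saving = cloud_hourly_rate - onprem_hourly_cost
    if hourly_saving <= 0:
        return None  # renting is never more expensive per hour, so no break-even
    return purchase_cost / hourly_saving


# Hypothetical inputs, for illustration only.
hours = break_even_hours(
    purchase_cost=30000.0,     # up-front hardware cost
    onprem_hourly_cost=0.50,   # power, cooling, and maintenance per GPU-hour
    cloud_hourly_rate=3.00,    # rental price per GPU-hour
)

utilization = 0.5  # assumed fraction of each day the GPU is busy
months = hours / (24 * 30 * utilization)

print("break-even after", hours, "GPU-hours")
print("about", round(months, 1), "months at", utilization, "utilization")
```

Low utilization pushes the break-even point further out, which is why occasional workloads tend to favor renting.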

4. GPUs in Machine Learning and Deep Learning

- Training: Handles billions of parameters

- Inference: Delivers real-time AI results

- Edge AI: Powers drones and mobile diagnostics

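Benchmark Example: In some published FP16 benchmarks the NVIDIA H100 outperforms the AMD MI300X, though results depend heavily on the model, batch size, and software stack.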

5. Choosing the Right GPU for AI: Buyer’s Guide

Buyer’s Checklist:

- VRAM capacity

- Tensor core availability

- AI framework compatibility

- Scalability

- Cooling and energy needs

Common Mistakes to Avoid:

- Ignoring VRAM needs

- Overlooking framework compatibility

- Underestimating cooling requirements
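
A rough VRAM check can catch the first mistake early. The sketch below estimates the memory needed for model weights at a given precision and compares it with a card's capacity. The helper names and the 1.2 overhead factor are assumptions for illustration. The estimate covers inference only: training also needs room for gradients, optimizer state, and activations, which can multiply the requirement several times over.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gib(num_params, precision):
    """Memory needed for the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


def fits_in_vram(num_params, precision, vram_gib, overhead_factor=1.2):
    """Rough inference-time check; overhead_factor is an assumed safety margin."""
    return weights_gib(num_params, precision) * overhead_factor <= vram_gib


params = 7_000_000_000  # a hypothetical 7-billion-parameter model
for precision in ("fp32", "fp16", "int8"):
    size = weights_gib(params, precision)
    verdict = "fits" if fits_in_vram(params, precision, vram_gib=24) else "does not fit"
    print(precision, round(size, 2), "GiB of weights:", verdict, "in 24 GiB")
```

Running the check at each precision shows how lowering precision can bring a model within reach of a smaller card.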

6. Alternative AI Accelerators

- TPUs: Google-designed accelerators, used mainly with TensorFlow and JAX

- NPUs: On-device inference

- FPGAs: Custom AI tasks

7. Real-World Applications of GPUs in AI

- Training LLMs like GPT-4

- AI medical imaging

- Autonomous vehicles

- E-commerce personalization

- Predictive maintenance

- Generative AI for video and 3D content

8. Cloud GPU Services and Platforms

Platforms like AWS EC2 P4, Azure NC-series, and Google Cloud AI Platform provide scalable GPU access.

Pro Tip: Spot instances save costs for non-critical jobs.

9. The Future of GPUs in AI

- AI-optimized cooling systems

- Photonic interconnects

- Hybrid GPU-TPU-NPU architectures

- Edge-optimized GPUs

10. Why Understanding What a GPU Is and Why It Supports AI Matters

This concept is more than a technical definition; it’s a strategic advantage. By knowing what a GPU is and why it supports AI, developers and organizations can design workflows that maximize training efficiency, optimize inference speed, and reduce operational costs. Whether deploying models on edge devices or training massive LLMs in the cloud, this knowledge bridges the gap between AI ambition and achievable results.

Conclusion & Key Takeaways

- Understanding what a GPU is and why it supports AI is key to unlocking its full potential in deep learning and AI scalability.

- GPUs generally outperform CPUs in AI training, large-scale inference, and distributed workloads.

- Cloud and hybrid GPU options make enterprise AI more accessible.

Call to Action: Share your experiences with GPUs in AI projects in the comments or discussion forums, explore additional resources to deepen your understanding, and consider testing different GPU configurations to see firsthand how they impact your AI performance.